Software-Defined Networking (SDN) scenarios are typically set up as a sandbox or testbed to assess SDN controllers or new applications. Most of the time, this is done virtually, by virtualising the physical infrastructure. This document focuses instead on deployment over real hardware.

Emulating a physical infrastructure is a common procedure that allows a network to be deployed quickly and easily, with its topology, size and capabilities defined in software. The Mininet platform and Open vSwitch (OVS) devices are excellent candidates for instantly booting topologies for local deployments or testing.

At the other end of the spectrum, physical equipment is used to provide production-like service to users; that is, the virtual network is replaced with a set of physical hardware. Defining and wiring such a physical infrastructure is therefore more complex than its virtual counterpart.

Stating the intention

Before even starting with the design of the physical testbed, multiple questions must be answered. Some of these are as follows:

- Purpose: is the testbed devoted to internal, single-user tests, or will it accommodate multiple users (each with its own “network slice”)?

- Availability: should the testbed support high traffic loads and remain operational no matter what?

- Operation modes: what will the testbed be doing? Connecting to the controller? Forwarding traffic? Providing management access? Interconnecting with other networks?

- Traffic isolation: what options will the users of the testbed have to isolate their traffic, and how will that be achieved?

The purpose, availability and need for traffic isolation do have a direct impact on the configuration of the network devices.

Depending on the purpose, the devices may point either to a general SDN controller or to an intermediate layer that acts as a multiplexer across multiple users. The common procedure is to configure the devices to point to the chosen SDN controller, so that the controller instance has access to the whole underlying physical infrastructure. A more advanced set-up, and a common requirement for service providers, is to “slice” or isolate different sections of the network in order to keep the traffic generated by each user properly separated. There are multiple ways to approach this scenario, depending on the degree of control each user should have over its traffic. If users can dynamically define and change their traffic, an intermediate layer or proxy can keep an abstraction and mapping between each user’s traffic and the devices, ports and matching/slicing conditions. Otherwise, each user can be given (or, more rarely, allowed to define initially) a tailored section of the network from the beginning. All of this is very specific to the set-up of the infrastructure, and there is no one-size-fits-all solution.
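The following minimal Python sketch illustrates the mapping that such an intermediate layer could keep. It assumes, purely for illustration, that a slice is a set of (DPID, port, VLAN) tuples. The class and method names are hypothetical and do not correspond to any existing slicing tool. The proxy rejects overlapping slices and can tell which user a packet belongs to.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple

# Hypothetical flowspace entry: (datapath ID, switch port, VLAN ID).
FlowSpace = Tuple[str, int, int]


@dataclass
class SliceMapper:
    """Hypothetical proxy-side mapping between users' slices and the physical network."""
    slices: Dict[str, Set[FlowSpace]] = field(default_factory=dict)

    def add_slice(self, user: str, flowspace: Set[FlowSpace]) -> None:
        # Refuse any (dpid, port, vlan) entry already owned by another user,
        # so that traffic from different users can never be confused.
        for other, owned in self.slices.items():
            clash = owned & flowspace
            if other != user and clash:
                raise ValueError(f"{user} overlaps with {other} on {sorted(clash)}")
        self.slices.setdefault(user, set()).update(flowspace)

    def owner(self, dpid: str, port: int, vlan: int) -> Optional[str]:
        # Return the user whose slice contains this packet's location, if any.
        for user, owned in self.slices.items():
            if (dpid, port, vlan) in owned:
                return user
        return None


if __name__ == "__main__":
    mapper = SliceMapper()
    mapper.add_slice("alice", {("0000000000000001", 1, 100), ("0000000000000002", 3, 100)})
    mapper.add_slice("bob", {("0000000000000001", 1, 200)})
    print(mapper.owner("0000000000000001", 1, 200))  # bob
```

A real proxy would also rewrite or filter the controllers' flow rules so that they stay within each slice. This sketch covers only the bookkeeping side.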

The availability requirements and the desired traffic isolation will require several parameters to be configured on the devices.

Finally, the operation modes are directly related to the wiring, that is, to how the devices are interconnected with each other, with their controller and with any external network.

Designing the topology

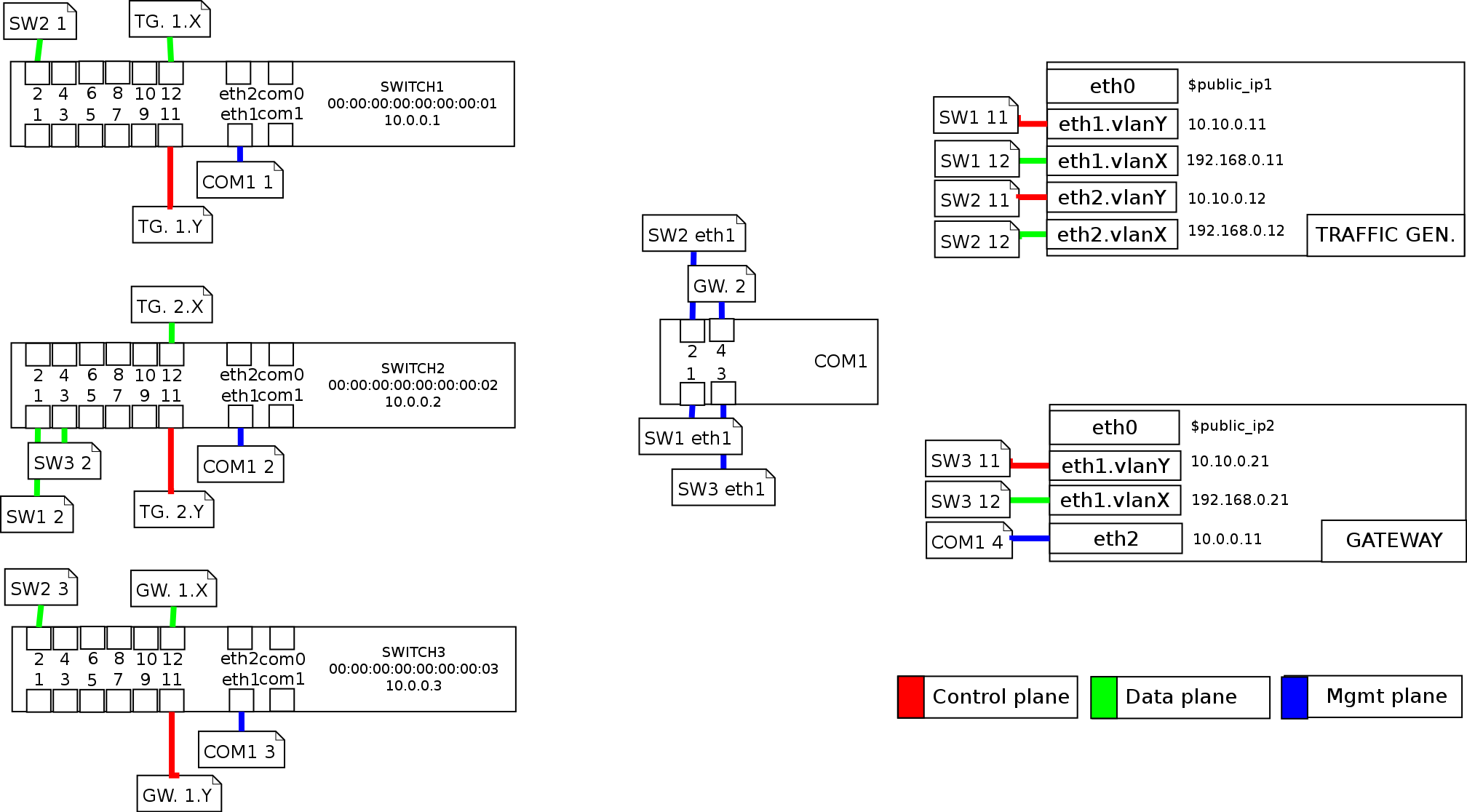

The SDN testbed must consist of the following planes or layers:

- Control plane: interact with the SDN controller to obtain information on how to forward packets

- Data plane: perform the forwarding of the (user’s) data packets

- Management plane: operate (configure or monitor) the devices

More information can be found here, here or here.

In practice, supporting the operations of each plane implies: (i) wiring the devices in a specific manner so that they are reachable from the SDN controller, (ii) providing a separate network for packet forwarding, spanning all network devices, and (iii) allowing operators and tools to access the network devices in order to configure and monitor them.

Controller connectivity

The SDN controller needs a complete view of the network. The control plane is then used to send messages to, and receive messages from, the devices. A separate section of the network can be defined for this purpose.

Packet forwarding

The data plane carries the users’ traffic. Assuming such traffic comes from a set of locations, the data plane requires the following:

- Connectivity between devices and locations: users generate traffic from a number of locations, which must be able to reach the devices

- Connectivity between devices: the network devices are connected to each other, forming a specific topology. Each device must be reachable from its neighbours in order to forward traffic end to end (possibly between two different locations)

Operator access

While the two layers above deal with forwarding decisions and their application, this last one deals with the administrators’ access to the devices. A separate network should be provided for the infrastructure operators to connect to the devices, as this is necessary for proper configuration and monitoring. Typically, devices provide dedicated management interfaces that are isolated from the rest of the ports.

The different layers or planes can be separated either (a) by wiring each one to different ports and interfaces, or (b) by sharing ports and interfaces while separating the L2 traffic with VLAN tags.
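Option (b) can be checked on paper before any cable is touched. The following Python sketch takes a hypothetical per-port plan, where each entry is a port, a plane, and a VLAN tag (or None for untagged). It reports the ports where two planes would end up on the same L2 segment.

The check is conservative: it requires every plane on a shared port to be tagged. A real set-up might legitimately carry one plane untagged as the native VLAN.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical port plan entry: (switch port, plane, VLAN tag or None if untagged).
PlanEntry = Tuple[str, str, Optional[int]]


def check_plane_separation(plan: List[PlanEntry]) -> List[str]:
    """Return the conflicts found; an empty list means the planes are separated."""
    by_port: Dict[str, List[Tuple[str, Optional[int]]]] = defaultdict(list)
    for port, plane, vlan in plan:
        by_port[port].append((plane, vlan))

    problems = []
    for port, entries in by_port.items():
        if len({plane for plane, _ in entries}) == 1:
            continue  # option (a): the port is dedicated to a single plane
        # option (b): shared port, so each plane needs its own VLAN tag
        owner: Dict[int, str] = {}
        for plane, vlan in entries:
            if vlan is None:
                problems.append(f"{port}: {plane} is untagged on a shared port")
            elif owner.setdefault(vlan, plane) != plane:
                problems.append(f"{port}: VLAN {vlan} used by both {owner[vlan]} and {plane}")
    return problems


if __name__ == "__main__":
    plan = [
        ("eth0", "management", None),  # dedicated management port
        ("eth1", "control", 10),
        ("eth1", "data", 20),
        ("eth2", "control", 10),
        ("eth2", "data", 10),          # clash: same tag for two planes
    ]
    print(check_plane_separation(plan))
    # ['eth2: VLAN 10 used by both control and data']
```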

Problematic conditions

Beware of problematic conditions, such as introducing loops into this L2 topology. This can easily happen when providing multiple connections between servers and switches, or when defining a full-mesh topology. If loops are added deliberately, they should be controlled either at hardware level (by enabling STP on the switches) or within the SDN controller or its applications (that is, the flows sent to the devices must prevent packets from looping).
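Loops can also be spotted in the wiring diagram before any cabling is done. The following Python sketch runs a union-find over a hypothetical list of links and returns every link that closes a loop. This includes parallel connections between the same server and switch.

The sketch only detects loops. Deliberate ones still have to be handled by STP or by the controller, as described above.

```python
from typing import Dict, Iterable, List, Tuple

Link = Tuple[str, str]  # (endpoint A, endpoint B), e.g. ("server1", "sw1")


def find_loop_links(links: Iterable[Link]) -> List[Link]:
    """Return the links that close an L2 loop, using union-find over devices."""
    parent: Dict[str, str] = {}

    def find(node: str) -> str:
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving keeps trees shallow
            node = parent[node]
        return node

    loop_links = []
    for a, b in links:
        root_a, root_b = find(a), find(b)
        if root_a == root_b:
            # a and b are already connected, so this extra link forms a loop
            loop_links.append((a, b))
        else:
            parent[root_a] = root_b  # merge the two connected components
    return loop_links


if __name__ == "__main__":
    wiring = [("server1", "sw1"), ("sw1", "sw2"), ("sw2", "sw3"),
              ("sw3", "sw1"), ("server1", "sw1")]
    print(find_loop_links(wiring))
    # [('sw3', 'sw1'), ('server1', 'sw1')]
```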

With the above information in mind, it is now possible to define the topology of the testbed. The style of the diagram and the information it provides depend on the needs of the operators. It can range from a high-level topology view, used to quickly spot connected services, servers, network devices or networks, to a low-level wiring diagram specifying port-to-port and port-to-interface connections. There are multiple tools available for drawing network diagrams, ranging from desktop applications (such as Microsoft Visio, or free ones such as Dia) to online tools (such as draw.io).

Note that the larger the set of connections or devices, the tidier the design should be. A simple port/interface-to-port/interface wiring definition is fine for small, ad-hoc testing scenarios. For larger or more stable ones, it is recommended to use labels with naming conventions and references to subsections of the network. A sample is provided below.

Wire it up

With the help of a network administrator and the design in hand, translating it to the data centre should be straightforward. Whether or not you feel nostalgic about the old days of switchboard operators, the task will feel somewhat similar. Of course, SDN allows this to be done once, removing the need for tedious end-to-end manual wiring or configuration per user service!

There are a few things to always keep in mind.

Iterations

This is only to be expected. The wiring may not work at first; successive iterations may be needed in order to troubleshoot and fix network issues or improve configurations.

Organisation

After each iteration, remember to update the diagram with the changes. Ideally, version it and back it up, then make it available to other operators and network administrators. This will save time when reacting to failures in the wiring configuration.

One data centre mantra, as far as I know, is: avoid spaghetti cabling at all costs. While beautiful cabling arrangements are not mandatory, the following should be pursued (in subjective decreasing order of preference):

- Labelling: whether with paper cable labels or wire markers, labelling each cable's source endpoint with its destination is extremely helpful, as it saves tracing each cable by hand during troubleshooting. Of course, labelling should also be extended to devices and servers

- Grouping: once the above is achieved, cable bridle rings can be used to group the cables into something manageable. Ungrouped cables are more prone to detaching from their endpoints and getting in the way when managing other devices

- Colour scheme: use different colours for different types of cable. The colour can relate to the plane, to the type of interconnection, and so on
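Labels are easier to keep consistent when they are generated from the wiring list rather than written by hand. The following Python sketch assumes a hypothetical RACK-DEVICE-PORT naming convention and a prefix built from the plane name and a cable number. Each end of the cable names itself first, then its destination. The convention is only an example; any scheme agreed among the operators works the same way.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Endpoint:
    rack: str    # e.g. "R01"
    device: str  # e.g. "SW01" for a switch, "SRV04" for a server
    port: str    # switch port or server interface, e.g. "P03" or "eth1"

    def name(self) -> str:
        # Hypothetical naming convention: RACK-DEVICE-PORT
        return f"{self.rack}-{self.device}-{self.port}"


def cable_labels(cable_id: int, plane: str, a: Endpoint, b: Endpoint) -> Tuple[str, str]:
    """Labels for both ends of a cable: each end names itself, then its destination."""
    # Prefix = first three letters of the plane + zero-padded cable number, e.g. "DAT007"
    prefix = f"{plane[:3].upper()}{cable_id:03d}"
    return (f"{prefix} {a.name()} > {b.name()}",
            f"{prefix} {b.name()} > {a.name()}")


if __name__ == "__main__":
    end_a, end_b = cable_labels(7, "data", Endpoint("R01", "SW01", "P03"),
                                Endpoint("R02", "SRV04", "eth1"))
    print(end_a)  # DAT007 R01-SW01-P03 > R02-SRV04-eth1
    print(end_b)  # DAT007 R02-SRV04-eth1 > R01-SW01-P03
```

The plane prefix can also be mapped to the cable colour, so that the label and the colour scheme reinforce each other.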

Configuring the devices

The configuration step varies widely depending on the firmware provided by the vendor. If available, check the device's operation guide, or simply browse through the CLI or configuration GUI of the devices. Common steps include enabling the device and entering its configuration mode in the CLI (e.g. configure). Remember to save the configuration before exiting.

Controller connectivity

The devices must be configured to point to their SDN controller, along with a set of related configuration parameters. Assuming OpenFlow is used, this is typically done by entering the OpenFlow configuration mode and defining the controller's IP address and port. Port 6633 is the historical default; 6653 is the port later assigned to OpenFlow by IANA and is used by default by many newer controllers and switches.

A more comprehensive list of what can be configured on SDN-enabled switches follows:

- Primary controller address: IP:port of the main controller the switch should connect to

- Backup controller address: IP:port of a secondary/backup controller to use in case of connectivity failures with the primary one

- OpenFlow version: the version to use, depending on what the device supports

- VLAN ID(s): VLAN tags available to the SDN controller for forwarding packets

- DPID: set custom identifier for the device

- Timeouts: timeout and interval times to wait for specific messages, such as echo-reply and echo-request

- Flow table: maximum number of flows allowed

- Other parameters, such as MAC learning, the “emergency mode” (connection to backup controller), etc.
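The parameters above can be captured in a small, vendor-neutral record and sanity-checked before they are typed into each switch. The following Python sketch is hypothetical. The field names, the echo interval and timeout, and the flow limit are illustrative assumptions, not any vendor's defaults. The actual CLI commands remain vendor-specific.

```python
import ipaddress
import string
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# OpenFlow wire-protocol versions that this sketch accepts.
OPENFLOW_VERSIONS = {"1.0", "1.1", "1.2", "1.3", "1.4", "1.5"}


def parse_controller(address: str) -> Tuple[str, int]:
    """Split an 'IP:port' string and validate both parts (IPv4 only, for brevity)."""
    host, _, port = address.rpartition(":")
    ipaddress.IPv4Address(host)  # raises ValueError if the IP is malformed
    port_num = int(port)         # raises ValueError if the port is not a number
    if not 0 < port_num < 65536:
        raise ValueError(f"invalid port in {address!r}")
    return host, port_num


@dataclass
class OpenFlowSwitchConfig:
    primary: str                     # "IP:port" of the main controller
    backup: Optional[str] = None     # "IP:port" of the backup controller
    version: str = "1.3"
    vlans: List[int] = field(default_factory=list)  # VLAN IDs handed to the controller
    dpid: Optional[str] = None       # custom datapath ID: 16 hex digits (64 bits)
    echo_interval_s: int = 5         # hypothetical values, not vendor defaults
    echo_timeout_s: int = 15
    max_flows: int = 1000

    def validate(self) -> None:
        """Raise ValueError on the first inconsistency found."""
        parse_controller(self.primary)
        if self.backup is not None:
            parse_controller(self.backup)
            if self.backup == self.primary:
                raise ValueError("backup controller must differ from the primary")
        if self.version not in OPENFLOW_VERSIONS:
            raise ValueError(f"unsupported OpenFlow version {self.version}")
        if any(not 1 <= vlan <= 4094 for vlan in self.vlans):
            raise ValueError("VLAN IDs must be in the range 1-4094")
        if self.dpid is not None and (
            len(self.dpid) != 16 or any(c not in string.hexdigits for c in self.dpid)
        ):
            raise ValueError("DPID must be exactly 16 hexadecimal digits")
        if self.echo_timeout_s <= self.echo_interval_s:
            raise ValueError("echo timeout should be longer than the echo-request interval")
        if self.max_flows <= 0:
            raise ValueError("maximum number of flows must be positive")
```

Keeping one such record per switch alongside the wiring diagram also documents which controller each device is supposed to reach.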

Also, if the interfaces between the server hosting the SDN controller and the devices are shared with planes other than the control plane, VLANs are probably in use to separate them. Those specific VLANs should be explicitly excluded from the VLAN IDs made available to the controller.

Packet forwarding

Similarly, if the interfaces between the locations where the users’ traffic is generated and the devices are shared with planes other than the data plane, VLANs are probably in use. Those specific VLANs should also be excluded from the VLAN IDs configured in the “Controller connectivity” section.
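Working out which VLAN IDs remain for the controller is simple set arithmetic. The following Python sketch assumes a hypothetical usable VLAN pool and a list of VLANs reserved for the other planes. It compresses the result into "a-b,c" ranges for the wiring documentation. Whether a given switch CLI accepts that range syntax is vendor-specific.

```python
from typing import Iterable, List


def controller_vlans(usable: range, reserved: Iterable[int]) -> List[int]:
    """VLAN IDs the SDN controller may use: the usable pool minus reserved plane VLANs."""
    reserved_set = set(reserved)
    return [vlan for vlan in usable if vlan not in reserved_set]


def to_ranges(vlans: List[int]) -> str:
    """Compress a sorted VLAN list into a compact 'a-b,c' string."""
    if not vlans:
        return ""
    parts = []
    start = prev = vlans[0]
    for vlan in vlans[1:]:
        if vlan == prev + 1:  # still inside a consecutive run
            prev = vlan
            continue
        parts.append(f"{start}-{prev}" if start != prev else str(start))
        start = prev = vlan
    parts.append(f"{start}-{prev}" if start != prev else str(start))
    return ",".join(parts)


if __name__ == "__main__":
    # Hypothetical plan: pool 100-110; VLAN 10 (management) and 105 (control) reserved
    available = controller_vlans(range(100, 111), reserved=[10, 105])
    print(to_ranges(available))  # 100-104,106-110
```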

Operator access

Devices should be reachable from each other and from one or more gateways. In practice, this means configuring the management interfaces of both the switches and the gateway(s) in the same subnet.
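A management addressing plan can be checked with Python's standard ipaddress module before anything is configured. The following sketch assumes a hypothetical plan that maps each device name to its management address. It reports addresses that fall outside the management subnet, addresses that use the network or broadcast address, and duplicate assignments.

```python
import ipaddress
from typing import Dict, List


def check_management_plan(subnet: str, interfaces: Dict[str, str]) -> List[str]:
    """Return the problems found in a {device: management IP} plan."""
    network = ipaddress.ip_network(subnet)
    problems = []
    owners: Dict[str, str] = {}
    for device, addr in interfaces.items():
        ip = ipaddress.ip_address(addr)
        if ip not in network:
            problems.append(f"{device}: {ip} is outside {network}")
        elif ip in (network.network_address, network.broadcast_address):
            problems.append(f"{device}: {ip} is the network or broadcast address")
        if str(ip) in owners:
            problems.append(f"{device}: {ip} already assigned to {owners[str(ip)]}")
        owners.setdefault(str(ip), device)
    return problems


if __name__ == "__main__":
    # Hypothetical management plan for one gateway and three switches
    plan = {"gw1": "192.168.100.1", "sw1": "192.168.100.11",
            "sw2": "192.168.1.12", "sw3": "192.168.100.11"}
    for problem in check_management_plan("192.168.100.0/24", plan):
        print(problem)
    # sw2: 192.168.1.12 is outside 192.168.100.0/24
    # sw3: 192.168.100.11 already assigned to sw1
```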

Technical information on configuring a switch (e.g. with a VLAN set-up to slice the management, control and data planes) can be found on the original OpenFlow website.

Testing the traffic

After design and configuration, testing should take place to verify proper end-to-end forwarding of packets. The simplest test covers each plane as follows:

- Management plane. Access the switches and verify that all of them are reachable from the gateway(s)

- Control plane. Run the controller and check that it has visibility of all connected devices

- Data plane. Configure the interfaces of the end-points (the locations from which users send traffic) in the same subnet, then test connectivity between them (e.g. with ping)
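The management and control checks can be partly scripted from a gateway. The following Python sketch makes two hypothetical assumptions: that switches expose SSH on port 22 of their management interfaces, and that the controller listens on a known port such as 6653. It only confirms that TCP connections can be opened. Whether the controller actually sees every device still has to be checked from the controller itself, and the data-plane test still relies on ping.

```python
import socket
from typing import Dict, List, Tuple


def tcp_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def check_planes(switches: List[str], controller: Tuple[str, int],
                 ssh_port: int = 22) -> Dict[str, bool]:
    """Hypothetical smoke test: management access to each switch and controller reachability."""
    results = {f"mgmt {sw}": tcp_reachable(sw, ssh_port) for sw in switches}
    host, port = controller
    results[f"controller {host}:{port}"] = tcp_reachable(host, port)
    return results
```

For example, check_planes(["192.168.100.11", "192.168.100.12"], ("10.0.0.10", 6653)) returns a dictionary mapping each check to True or False. The addresses are placeholders for the testbed's own.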

If ping succeeds, congratulations! The set-up is complete. Otherwise, troubleshooting should consider the following (in decreasing order of likelihood):

- Interface high-level configuration: ensure both src and dst interfaces have been assigned an IP address in the same subnet. The same applies to VLANs, if in use

- ICMP packets: are they being sent from the source and reaching the destination interface?

- ARP tables: are they being populated properly? That is, is the dst IP mapped to the correct MAC address, through the expected interface?

- Firewall: the servers may have a default REJECT/DROP policy. Certain OSs, such as CentOS or RHEL, restrict incoming connections by default, even if no iptables rules were configured manually. If this is the case, the required ports should be opened

- Interface low-level configuration: are the interfaces up (e.g. check with “ethtool”)? Is the proper speed set? Are they negotiating properly with the interfaces or ports they are connected to?

- Wiring check: does the physical set-up match the wiring diagram? If so, are the links up?

- Ask for help: a fresh pair of eyes (and a different skill set) can bring a new perspective on the issue
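The ARP and link checks above can be scripted on a Linux end-point. The following Python sketch does two things:

- It parses the kernel's ARP table as exposed in /proc/net/arp, whose columns are IP address, HW type, Flags, HW address, Mask and Device. It relies on flag 0x2 (ATF_COM), which marks a resolved entry.
- It reads an interface's operational state from /sys/class/net/<iface>/operstate.

The function names are hypothetical. The expected MAC address and interface are inputs to be taken from the wiring diagram.

```python
from pathlib import Path
from typing import Dict, List


def parse_proc_net_arp(text: str) -> Dict[str, Dict[str, str]]:
    """Parse /proc/net/arp content into {ip: {"mac": ..., "flags": ..., "device": ...}}."""
    entries = {}
    for line in text.splitlines()[1:]:  # the first line is the column header
        fields = line.split()
        if len(fields) >= 6:
            ip, _hw_type, flags, mac, _mask, device = fields[:6]
            entries[ip] = {"mac": mac.lower(), "flags": flags, "device": device}
    return entries


def check_arp(text: str, dst_ip: str, expected_mac: str, expected_dev: str) -> List[str]:
    """Compare the ARP entry for dst_ip against the MAC and interface the wiring implies."""
    entry = parse_proc_net_arp(text).get(dst_ip)
    if entry is None:
        return [f"no ARP entry for {dst_ip}"]
    problems = []
    if (int(entry["flags"], 16) & 0x2) == 0:  # ATF_COM not set: entry unresolved
        problems.append(f"ARP entry for {dst_ip} is incomplete")
    if entry["mac"] != expected_mac.lower():
        problems.append(f"{dst_ip} resolves to {entry['mac']}, expected {expected_mac.lower()}")
    if entry["device"] != expected_dev:
        problems.append(f"{dst_ip} is reached through {entry['device']}, expected {expected_dev}")
    return problems


def link_state(iface: str, sysfs: Path = Path("/sys/class/net")) -> str:
    """Kernel operational state of an interface, e.g. "up" or "down"."""
    return (sysfs / iface / "operstate").read_text().strip()
```

On a live host, the ARP check would be fed with Path("/proc/net/arp").read_text(). The link state complements, rather than replaces, what ethtool reports about speed and negotiation.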